In the previous post, I described our level 2 evaluation of CJK resource discovery, and noted a significant blocker to moving forward: the CJK improvements we were making broke current production behavior.

CJK Indexing Breaks Production?

I call our sw_index_tests "relevancy tests" because these tests submit Solr queries to our index and evaluate the results for "correctness." In a sense, though, they could also be called regression tests: changes to the Solr index shouldn't break any of them. Of course, they do sometimes break when records are added to or removed from our collection, but we try to keep them from being too brittle without sacrificing much test precision.

Let me step back a minute and give a bit of context. Our data is almost entirely bibliographic metadata in the MARC format, and it frequently contains text in non-Latin scripts. When data is in a non-Latin script, the MARC 21 standard expects it to be put in a linked 880 field. SearchWorks has always indexed the data in the 880s, and has always recognized that this data isn't English and therefore should not be stemmed or have stopwords applied. This is the fieldtype definition we use for these linked 880 vernacular script fields:

We also use this fieldtype for unstemmed versions of English fields, so that more exact matches sort first. For example, we index the title both stemmed and unstemmed, and we boost unstemmed matches more than stemmed ones so they score higher.

Note that the textNoStem fieldtype uses the WhitespaceTokenizer, so tokens are created by splitting text on whitespace. When we spun up SearchWorks five years ago, we wanted the behaviors below (all taken from sw_index_tests) for non-alphanumeric characters, because of specific resources in our collection. You can even see the bug tracker (JIRA) ids in many of the tests as tags.
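To make the whitespace behavior concrete, here is a rough Ruby stand-in for what the WhitespaceTokenizer does. It is an approximation for illustration only (Ruby's `\s` covers ASCII whitespace, while Solr uses its own whitespace definition), and the helper name `whitespace_tokens` is hypothetical.

```ruby
# Rough stand-in for Solr's WhitespaceTokenizer: split on runs of whitespace only.
# Punctuation and symbols ("+", "#", "-") are never treated as token boundaries,
# so they survive inside the tokens.
def whitespace_tokens(text)
  text.split(/\s+/).reject(&:empty?) # drop the empty token a leading space produces
end

p whitespace_tokens("C++ programming")      # prints ["C++", "programming"]
p whitespace_tokens("Concerto in C# minor") # prints ["Concerto", "in", "C#", "minor"]
p whitespace_tokens("3-D printing")         # prints ["3-D", "printing"]
```

Because "C++" and "C#" remain distinct tokens, a search for one does not match the other, which is exactly what the tests below rely on.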

numbers mashed with letters:

hyphens:

musical keys:

programming languages:

As you've probably guessed, what the tests above have in common is that they failed when we changed our textNoStem fieldtype definition to use a tokenizer that assigns CJKBigramFilter-friendly token types. So if we used this fieldtype definition instead:

we got a number of test failures:

Most of the failures we got by substituting the text_cjk fieldtype for textNoStem are due to hyphens, programming languages with special characters, and musical keys.
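A crude way to see why these categories fail: tokenizers that segment text on word boundaries tend to discard punctuation and symbols. The Ruby sketch below is NOT the ICU or UAX #29 word-break algorithm; it simply keeps runs of Unicode letters and digits, which is enough to show how "C++", "C#" and "C" can collapse into the same token. The helper name is hypothetical.

```ruby
# Crude illustration only: keep runs of Unicode letters/digits and drop
# everything else. Real ICU/Standard tokenizers follow the much richer
# UAX #29 word-break rules; this only approximates how they treat "+", "#", "-".
def letter_digit_tokens(text)
  text.scan(/[\p{L}\p{N}]+/)
end

p letter_digit_tokens("C++ programming")      # prints ["C", "programming"]
p letter_digit_tokens("Concerto in C# minor") # prints ["Concerto", "in", "C", "minor"]
p letter_digit_tokens("3-D printing")         # prints ["3", "D", "printing"]
```

Once "C++" and "C" yield the same token, the index can no longer tell them apart, which is why the programming-language and musical-key tests broke.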

Let's use the handy-dandy field analysis GUI to see what happens to a string with these special characters when it passes through the textNoStem fieldtype:

and what happens when we pass the same string through the ICUTokenizer:

Note that the ICUTokenizer has dropped all of the non-alphanumeric characters. The same happens with the StandardTokenizer:

So it seems unlikely that a single fieldtype definition will work both for CJK text analysis and for non-alphanumeric characters. What should we do?

Context-Sensitive Search Fields

Recall that, as discussed in previous posts, we need different mm and qs values to get satisfactory CJK results, but we want to keep our original mm and qs values for non-CJK discovery. So we are already on the hook for the SearchWorks Rails application to recognize when a user query contains CJK characters, so that it can pass in CJK-specific mm and qs values. I realized that I could also make CJK flavors of all the qf and pf variables in the requestHandler in solrconfig.xml, like this:

So now when our Rails application detects CJK characters in a user's query, it needs to pass in special mm, qs, qf, pf, pf3 and pf2 arguments to the Solr requestHandler. A normal Solr query for an author search might look like this (I am not URL-encoding here):

q={!qf=$qf_author pf=$pf_author pf3=$pf3_author pf2=$pf2_author}Steinbeck

while a Solr query for a CJK author search would look like this:

q={!qf=$qf_author_cjk pf=$pf_author_cjk pf3=$pf3_author_cjk pf2=$pf2_author_cjk}北上次郎&mm=3<86%25&qs=0
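Since the examples here are not URL-encoded, the following is a minimal Ruby sketch of how the CJK author request parameters could be encoded with the standard library's `URI.encode_www_form`. The helper name and constant are hypothetical; only the local param variable names and the mm and qs values come from the query shown here.

```ruby
require "uri"

# Hypothetical helper (not SearchWorks code): builds the URL-encoded
# parameters for a CJK author search.
CJK_AUTHOR_LOCAL_PARAMS =
  "{!qf=$qf_author_cjk pf=$pf_author_cjk pf3=$pf3_author_cjk pf2=$pf2_author_cjk}".freeze

def cjk_author_query_string(user_query)
  URI.encode_www_form(
    "q"  => CJK_AUTHOR_LOCAL_PARAMS + user_query,
    "mm" => "3<86%", # once encoded, "<" becomes %3C and "%" becomes %25
    "qs" => "0"
  )
end

puts cjk_author_query_string("北上次郎")
```

Letting the library encode the whole value avoids the half-encoded `3<86%25` form, which is easy to get wrong by hand.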

You may already be familiar with Solr LocalParams, the mechanism by which we are passing in qf and pf settings as part of the q value above.

Now, how do we test this? Does it work?

Applying LocalParams on the Fly

First, I wanted to try this new approach in our relevancy tests to see if it worked. If you look at this test, you'll see there isn't anything in the test itself indicating it uses CJK characters:

This test uses the magic of rspec shared_examples, which were employed to DRY up the test code. The shared example code looks like this:

The method called by the shared example is in the spec_helper.rb file. I'll do a quick trace through here:

So the cjk_query_resp_ids method calls cjk_q_arg, which, for our author search, calls cjk_author_q_arg:

which clearly inserts the correct cjk author flavor qf and pf localparams.
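A hypothetical Ruby sketch of the same idea follows. It is not the actual sw_index_tests code, and the list of supported search types is an assumption. Each type maps to the CJK-flavored qf/pf/pf3/pf2 variables, which are then prepended to the user query as LocalParams.

```ruby
# Hypothetical sketch of a cjk_q_arg-style helper (not the real test code).
CJK_SEARCH_TYPES = %w[author title subject].freeze # assumed list of search types

def cjk_local_params(search_type)
  unless CJK_SEARCH_TYPES.include?(search_type)
    raise ArgumentError, "no CJK flavor for #{search_type.inspect}"
  end
  sfx = "#{search_type}_cjk"
  "{!qf=$qf_#{sfx} pf=$pf_#{sfx} pf3=$pf3_#{sfx} pf2=$pf2_#{sfx}}"
end

# Prepends the CJK LocalParams to the raw user query.
def cjk_q_arg(search_type, user_query)
  cjk_local_params(search_type) + user_query
end

puts cjk_q_arg("author", "北上次郎")
# prints {!qf=$qf_author_cjk pf=$pf_author_cjk pf3=$pf3_author_cjk pf2=$pf2_author_cjk}北上次郎
```

Raising on an unknown search type makes a missing CJK flavor fail loudly in a test rather than silently falling back to the non-CJK fields.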

What about mm and qs? These are added later in the helper code chain; the following method is also called from cjk_query_resp_ids:

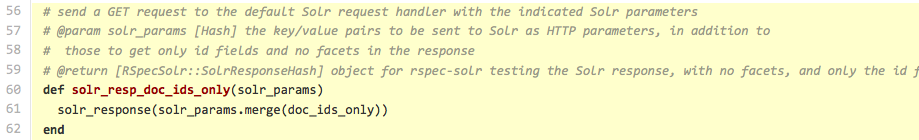

which in turn calls the solr_response method:

Detecting CJK in a query string

What I've shown above is that my CJK tests use a method, cjk_query_resp_ids, to ensure the correct CJK localparams are sent to Solr. But I haven't shown how to automatically detect whether a query has CJK characters.

Look again at the solr_response method. It first calls a method that counts the number of CJK unigrams in the user query string, which is a snap because Ruby regular expressions support Unicode script properties such as \p{Han}:
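A minimal sketch of such a counter, using Ruby's Unicode script properties. Which scripts count as "CJK" here (Han, Hiragana, Katakana, Hangul) is an assumption for illustration, and the method name is hypothetical.

```ruby
# Counts CJK characters ("unigrams") in a query string.
# The set of scripts treated as CJK is an assumption for this sketch.
CJK_CHAR = /[\p{Han}\p{Hiragana}\p{Katakana}\p{Hangul}]/

def cjk_unigrams_size(str)
  str.scan(CJK_CHAR).size
end

p cjk_unigrams_size("北上次郎")          # prints 4
p cjk_unigrams_size("Steinbeck")         # prints 0
p cjk_unigrams_size("北上次郎 mystery")  # prints 4
```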

If CJK characters are found in the user query, solr_response then calls a method that returns the mm and qs parameters:
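Here is a hedged Ruby sketch of that step, assuming the CJK values used in this post (mm=3<86%, qs=0); the method and constant names are hypothetical. In Solr's mm syntax, "3<86%" means that queries with 3 or fewer optional clauses must match all of them, while longer queries must match 86% of them (rounded down).

```ruby
CJK_CHAR = /[\p{Han}\p{Hiragana}\p{Katakana}\p{Hangul}]/ # assumed CJK scripts
CJK_MM = "3<86%"
CJK_QS = 0

# Returns extra Solr params for CJK queries. An empty hash leaves the
# request handler's default mm and qs in effect for non-CJK queries.
def cjk_mm_qs_params(str)
  return {} unless CJK_CHAR.match?(str)
  { "mm" => CJK_MM, "qs" => CJK_QS }
end

p cjk_mm_qs_params("北上次郎")  # prints {"mm"=>"3<86%", "qs"=>0} (format varies by Ruby version)
p cjk_mm_qs_params("Steinbeck") # prints {}
```

Returning an empty hash rather than the default values keeps solrconfig.xml as the single place where non-CJK mm and qs are defined.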

Even if you didn't follow all that, we definitely end up with the following being sent to Solr:

q={!qf=$qf_author_cjk pf=$pf_author_cjk pf3=$pf3_author_cjk pf2=$pf2_author_cjk}北上次郎&mm=3<86%25&qs=0

Using all that helper code above, plus a bit more for other types of searches (title, subject, everything ...), all of our production tests passed, as did all of our CJK tests: the issues with hyphens, musical key signs and so on went away. Yay!

(I should mention that the layers of indirection in that code are useful in contexts beyond the particular test illustrated.)

Context-sensitive CJK in Our Web App

Great: our production tests all pass, and our CJK tests pass too! But what about repeating all that fancy footwork in the SearchWorks web application? We need the correct requests sent to Solr based on automatic CJK character detection in user-entered queries.

In fact, the SearchWorks Rails application uses a lot of the same code as our relevancy tests. Let's go through it.

As you may know, SearchWorks is built using Blacklight, a Ruby on Rails engine. It all starts with a "before_filter" in the CatalogController:

That filter is really a method that calls another method and adds the result to the existing Solr search parameters logic (Blacklight magic):

The cjk_query_addl_params method checks the user query string for CJK characters, adjusts mm and qs accordingly, and applies the local params as appropriate for CJK flavored searches:

The two methods I highlighted with purple boxes above are exact copies of those same methods in our relevancy testing application. They live in a helper file, because they are used to tweak advanced search for CJK, which will be addressed in a future post.
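For readers who want the overall shape without Blacklight, here is a plain-Ruby sketch of what a cjk_query_addl_params-style method might return for merging into the Solr parameters. It is not the SearchWorks code: the search-field names, the field-to-variable mapping, and the choice to leave q untouched for unmapped fields are all assumptions.

```ruby
CJK_CHAR = /[\p{Han}\p{Hiragana}\p{Katakana}\p{Hangul}]/ # assumed CJK scripts
# Hypothetical mapping from search field names to qf/pf variable stems.
CJK_FIELD_STEMS = { "search_author" => "author", "search_title" => "title" }.freeze

# Returns the extra params to merge for a CJK query; {} for non-CJK queries.
def cjk_query_addl_params(user_query, search_field)
  return {} unless user_query && CJK_CHAR.match?(user_query)

  extra = { "mm" => "3<86%", "qs" => 0 }
  stem = CJK_FIELD_STEMS[search_field]
  if stem # only fields with a CJK flavor get LocalParams prepended
    sfx = "#{stem}_cjk"
    extra["q"] = "{!qf=$qf_#{sfx} pf=$pf_#{sfx} pf3=$pf3_#{sfx} pf2=$pf2_#{sfx}}#{user_query}"
  end
  extra
end

solr_params = { "q" => "北上次郎", "rows" => 20 }
solr_params.merge!(cjk_query_addl_params(solr_params["q"], "search_author"))
```

Returning a hash to merge, instead of mutating parameters directly, keeps the detection logic testable outside the controller.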

We put these changes in a test version of the SearchWorks application, asked the CJK testers to try it out, and it all worked! We also have end-to-end tests for the application, which confirmed that these changes didn't negatively impact any existing functionality while delivering better CJK results.

D'oh!

In the course of writing this post, I realized that I can probably use the same fieldtype for non-CJK 880 text and for CJK 880 text; I may only need to distinguish between unstemmed English fields and all vernacular fields, rather than between CJK and non-CJK 880 text. Since this approach could reduce the number of qf and pf variables in our solrconfig.xml files, I may experiment with it in the future.

Where Are We Now?

This post showed how I removed our blocker to putting CJK discovery improvements into production: how I analyzed the way CJK Solr field analysis was breaking production behaviors, the solution I chose, and how we tested and applied that solution.

With the blocker removed, and two of the three items on the "must do" list fixed (see previous post), we asked the testers via email to vet our work. They agreed we had fixed the intended problems without breaking anything else. At this point, we worked out a schedule to push these fixes to production.

Well, in reality, one of the fixes on the "should do" list went to production as well, and we agreed that getting advanced search working for CJK could be a "phase 2" improvement. Future posts will address all the other improvements: fixing advanced search for CJK and the four other tweaks we added to improve CJK resource discovery (not necessarily in that order). And of course we will share the final Solr recipes and our code (already available via GitHub).

I should have mentioned that our synonyms for computer languages like C++ and C#, as well as for musical keys, came from a post by Jonathan Rochkind on his blog (http://bibwild.wordpress.com/2011/09/20/solr-indexing-c-c-etc/).

And we were committed to keeping them working partly because we had made those improvements just before the recent CJK work. Being able to distinguish C programming from C++ programming, and C minor from C# minor, was well received. Thanks, Jonathan!